Paul Barbaste (M.21), Deep into Neuro Science-Fiction

After controlling robots with the power of thought and programming algorithms on the dark web, Paul Barbaste (M.21) is working on a technology that allows tetraplegic individuals to remotely control objects using new models of generative artificial intelligence. An interview with a mind in constant motion.

Alongside neuroscientist Olivier Oullier, Paul Barbaste has developed a brain-machine interface that allows connected objects and environments – whether it’s a workstation or a phone – to adapt to users or even to be controlled solely by thought. Telekinesis? Science fiction? Neither: it is the product of several artificial intelligence models working together, months of research, and an alliance between the experience of prominent figures in the field and the entrepreneurial spirit of a 27-year-old inventor.

From Gotham to Star Wars

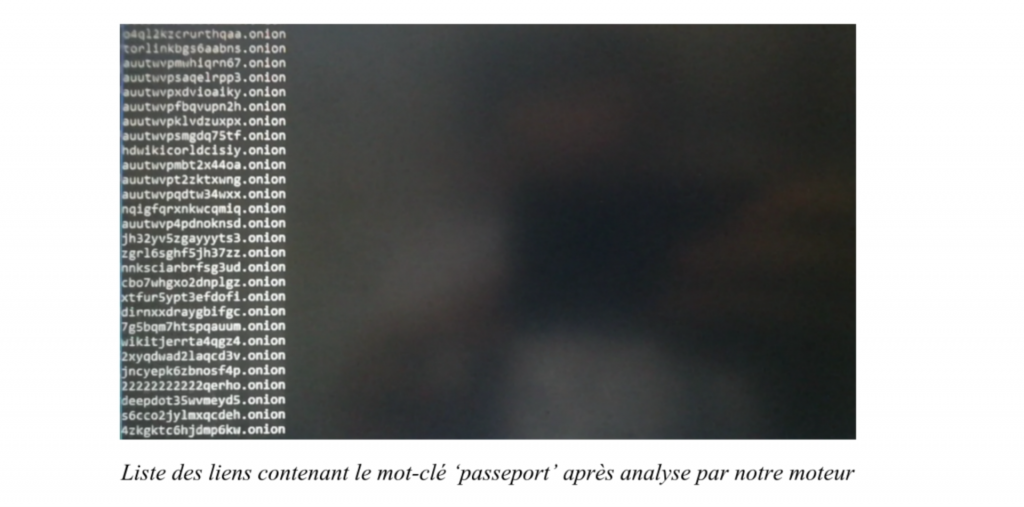

“My mother likes to say that I started playing with a computer before I learned to read,” says Paul Barbaste, whose interest in computer programming emerged at an early age. Despite being born into a family of engineers, he chose to study at Sciences Po Strasbourg rather than at a prestigious engineering school. On the side, he worked as a cybersecurity analyst, spending entire days on the dark side of the internet. “The dark web is as unregulated as it is disorganized. Keep in mind that there’s no search engine like Google to navigate it,” he explains.

Tired of dealing with sometimes degrading content on a daily basis, he programmed his own algorithm to retrieve and interpret information using Natural Language Processing (NLP), “a precursor to AI that is currently at the heart of ChatGPT.” He named the search tool Gotham. With a colleague, he also created the “Richelieu project,” which compiles a list of the 20,000 most commonly used French passwords and is available on GitHub, the platform favored by web developers.

Terminal of “Gotham,” the search engine for the dark web designed by Paul Barbaste.

In 2018, the science fiction enthusiast – Star Wars in particular – came across a video of a young Brazilian tetraplegic, Rodrigo Hübner Mendes, piloting a Formula 1 car with his thoughts through a brain-machine interface (BMI). Mendes was equipped with brain activity sensors marketed by the California-based company Emotiv, a world leader in neurotech. BMIs became an obsession for Paul. And Emotiv’s president Olivier Oullier would soon become a business partner. Paul mentioned his ambition to his cybersecurity engineer colleagues who then advised him to… let it go. Their argument? “You’re not an engineer.”

“I didn’t want to stop dreaming”

Paul Barbaste, then a student, developing a program to control a robot with his thoughts using an electroencephalogram-equipped headset.

He nevertheless embarked on a project called Jedi, which let him control a small BB-8 droid – a character from the Star Wars saga – with his thoughts. An algorithm captured and interpreted his brain activity through an interface, distinguishing a neutral state from the sending of a mental command. “It took me two and a half years to release a first prototype that was halfway functional,” explains Paul Barbaste. “This coincided with the release of the last film in the Skywalker saga.” His project caught the attention of the local press and journalists from national TV channel M6.
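To give a rough idea of what telling a neutral state from a mental command can involve, here is a minimal sketch in Python. It is not the algorithm used in the Jedi project, which the article does not describe: it assumes, purely for illustration, that a command shows up as a rise in signal power above a threshold calibrated on neutral recordings. The function names, the synthetic signal, and the parameter `k` are all hypothetical.

```python
import math
import random
from statistics import mean, stdev


def signal_power(window):
    """Mean squared amplitude of one window of samples."""
    return sum(x * x for x in window) / len(window)


def calibrate(neutral_windows, k=3.0):
    """Threshold = mean neutral power + k standard deviations (k is an assumption)."""
    powers = [signal_power(w) for w in neutral_windows]
    return mean(powers) + k * stdev(powers)


def detect(window, threshold):
    """Label a window as a command when its power exceeds the threshold."""
    return "command" if signal_power(window) > threshold else "neutral"


def synthetic_window(amplitude, rng, n=128):
    """Hypothetical stand-in for a recorded window: a 10 Hz sine plus noise."""
    return [
        amplitude * math.sin(2 * math.pi * 10 * i / 128) + rng.gauss(0, 0.2)
        for i in range(n)
    ]


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Calibration phase: record the user at rest.
neutral_recordings = [synthetic_window(1.0, rng) for _ in range(50)]
threshold = calibrate(neutral_recordings)

# Detection phase: a much stronger signal stands in for a mental command.
print(detect(synthetic_window(3.0, rng), threshold))
```

Real systems work on far noisier signals and typically rely on trained models rather than a single threshold, which helps explain why a first working prototype took more than two years.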

“I didn’t want to stop dreaming,” says the young man. “Doctors, merchants, engineers… French society is structured like castes. It’s complicated to have hybrids.” However, he saw an opportunity in the X-Entrepreneur Master program at HEC and École Polytechnique to steer his project towards entrepreneurship and delve into the engineering of artificial intelligence. During his first year, he made contact with the neuroscientist from the video, Olivier Oullier, a former member of the Executive Committee of the World Economic Forum where he led health and healthcare strategy.

The brain-machine interface market has seen an acceleration of acquisitions and investments by the FAANG tech giants over the past five years. In 2023, Apple and Meta published patents for headphones equipped with electrodes capable of recording brain activity.

We are reaching maturity in the development of our artificial intelligence model that can detect a mental command.

But the use of these cutting-edge devices is currently limited to scientific and clinical research, neuromarketing, and gaming. “In almost all smartwatches, you already have sensors that can record heart activity. The new virtual reality headsets from Apple and Meta have eye movement sensors,” says Paul Barbaste. “The next step will be the large-scale integration of brain activity sensors. This will be part of the future of human-machine interactions.”

Innovations for People with Disabilities

The entrepreneur’s project is to make his innovations “accessible to as many people as possible” and to “serve the common good.” In 2022, Paul Barbaste and Olivier Oullier founded Inclusive Brains to offer brain-machine interfaces that make it easier for people with motor disabilities to integrate into the workforce. With the help of the TechLab of the French Association of Paralyzed People, they have the opportunity to interact with tetraplegics who can only communicate through eye blinks. “Very few are equipped,” says Paul, who is also responsible for a training program on neuroscience and neurotechnology at École Polytechnique. “Many innovations emerged from disability. Graham Bell worked on the first telephone prototypes to help his mother who was hearing impaired. The remote control was invented for people unable to move.”

Paul Barbaste activating a robotic exoskeleton using an electrode-equipped headset.

They are currently developing a generative artificial intelligence that transforms neurophysiological measurements into commands to control a computer without the need to move, touch, or speak. “AIs like ChatGPT are language models trained only with text. However, not everything is conveyed through language. Our generative AI is trained with brainwaves and physiological data to come as close as possible to human functioning,” says Paul Barbaste. “Then, our models analyze and classify this information to detect real-time variations in attention or mental load, for example.”
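The classification step mentioned above can be illustrated with a very small sketch. It is not Inclusive Brains' model, which is generative and trained on real brainwave and physiological data. It assumes instead a simple nearest-centroid classifier over two made-up, normalized features (a brain-signal power value and a heart rate value), and it reports each change of state in a stream of measurements. All names and numbers are hypothetical.

```python
from math import dist
from statistics import mean


def fit_centroids(samples):
    """Map each label to the average of its example feature vectors."""
    return {
        label: tuple(mean(column) for column in zip(*vectors))
        for label, vectors in samples.items()
    }


def classify(features, centroids):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


def state_changes(stream, centroids):
    """Yield (index, label) each time the predicted state changes."""
    previous = None
    for index, features in enumerate(stream):
        label = classify(features, centroids)
        if label != previous:
            yield index, label
            previous = label


# Hypothetical labeled examples: (normalized signal power, normalized heart rate).
training = {
    "low_load": [(0.80, 0.30), (0.70, 0.35), (0.75, 0.25)],
    "high_load": [(0.30, 0.70), (0.35, 0.80), (0.25, 0.75)],
}
centroids = fit_centroids(training)

# Hypothetical stream of incoming measurements.
stream = [(0.78, 0.30), (0.60, 0.45), (0.30, 0.72), (0.28, 0.80)]
for index, label in state_changes(stream, centroids):
    print(f"sample {index}: mental load is now {label}")
```

A nearest-centroid rule is chosen here only because it fits in a few lines; it shows the idea of mapping measurements to a state and flagging variations, not the performance of a production model.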

Inclusive Brains has grown with the support of business angels and Bpifrance, but has not yet needed to raise significant funds. In late 2023, Inclusive Brains received two awards at the Handitech Trophy by Bpi, one of them presented by French Minister of Higher Education and Research Sylvie Retailleau. Partially based at the HEC Incubator and a participant in the HEC Creative Destruction Lab (CDL) program on deep tech at the Innovation & Entrepreneurship Institute, the startup is on Station F’s Future 40 list and is supported by the CMA-CGM Foundation. The two co-founders ultimately aim for their technology to be used by everyone, with the intention of improving health and safety in work environments.

Published by Estel Plagué